While designing the new framework, it was established that, on the scalability/availability front, one of the goals was to achieve process isolation to avoid the problems the NEXT.API suffered from. This process was slow and inconvenient and led to the current iteration of the Netflix framework, one in which scalability and availability, and developer productivity are taken into account. To test a script, the team had to upload it to a test site, run it, test it, and, if there were any problems, go through the whole process again after troubleshooting the issues.

#Netflix client ui javascript software

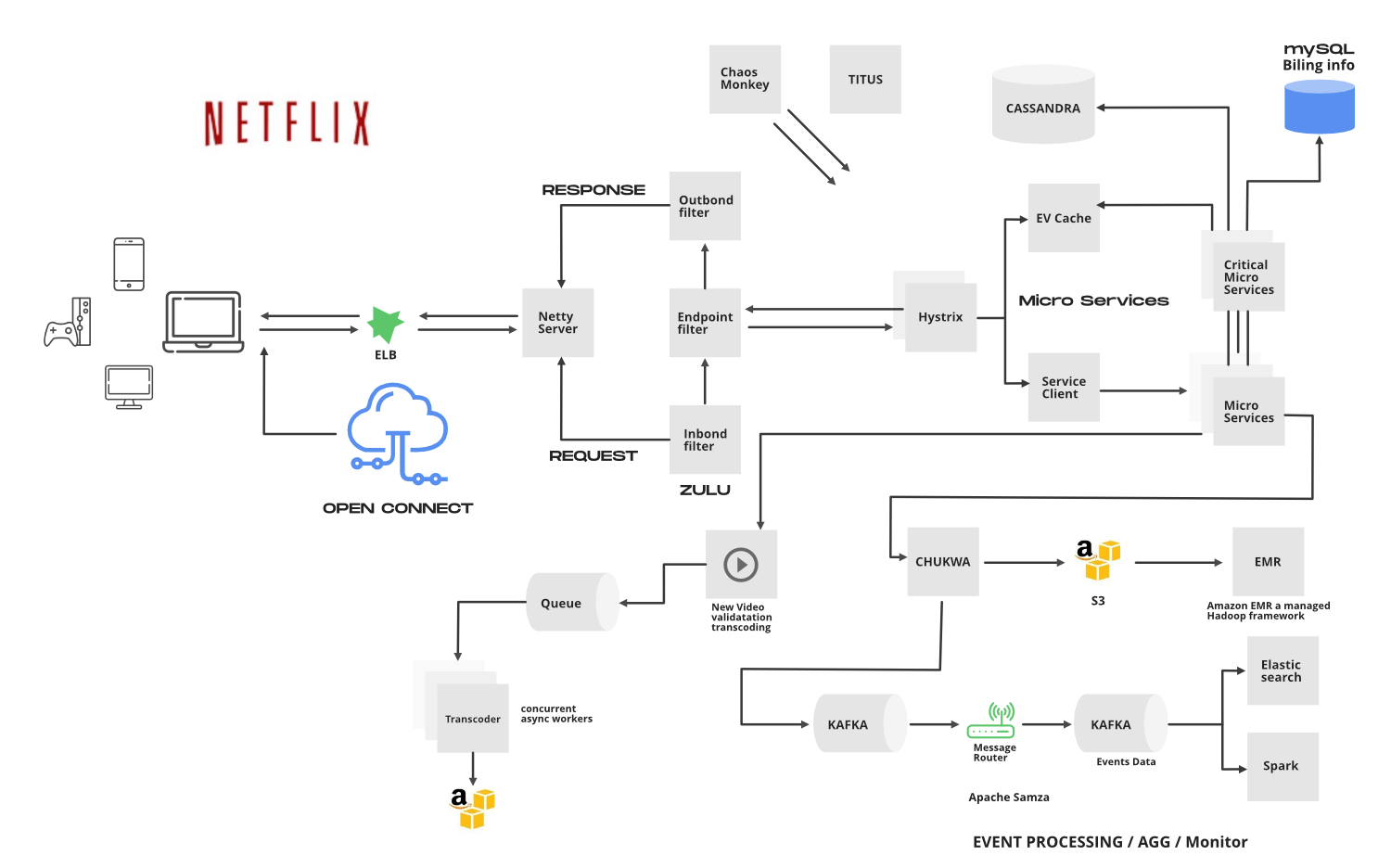

Another thing that even led to outages were errors in the scripts themselves: If a script had a memory leak, for example, it could bring down the system for everyone.Īnother problem was what Xiao calls “Developer Ergonomics.” The NEXT.API server was a very complex piece of software with multiple moving parts. This led to expensive upgrades when more resources were needed. It was common, says, Xiao, to “run out of headspace,” be that memory, CPU, or I/O bandwidth. Netflix has literally thousands of scripts sharing the same space, serving millions of clients. The problem was the Monolith was back again, and that led to scaling problems. The API service itself could also be updated independently from the APIs that it was serving. The teams could change the scripts (written in Groovy) as much as they liked without affecting other teams. The API.NEXT allowed each team to upload their own custom APIs to the servers. Another evolution of the Netflix framework was required. The different developer teams needed flexibility to innovate for the platforms they were supporting, and the resulting REST API was too clunky and restrictive for this. Ultimately, it proved difficult to maintain, because the API became more complex and bloated as developers tried to retrofit it with more features. So, in order to, for example, fetch all of a customer’s favorite movies, the services had to make multiple round trips to the back end.

REST is resource based and every little element on the Netflix UI is a resource.

Also, as a different team owned the REST API, the microservices teams were often waiting weeks for API changes to support their own new services. For one it was inflexible, as it was originally designed for one kind of device and adding new devices was painful. However, the REST API also came with its fair share of disadvantages. The new framework also separated the rendering of the UI and the accessing of data processes. This unlocked the ability to support more devices. So in the next iteration, the development team migrated to a REST API. Furthermore, increasing the number of supported devices was nearly impossible in any practical sense. When a new microservice was added to the existing ones, they had to push the service. If one of the development teams launched a new and improved version of a client, they had to push the service. Every time a new show launched and they wanted to add a new roll title to the UI, they had to push the service. To begin with, it was very slow to push and innovate. The Java server - the monolith in this story - suffered from several issues. For each microservice there is a team of developers that more or less owns the service and provides a client to the Java server to use.

Netflix relies on microservices to provide a diverse range of features. The server did more or less everything, both rendering the UI and accessing the data. In its first iteration, Netflix only worked on the browsers, and the framework was simply a Java web server that managed everything. As the number of users increased, so did the number of platforms they had to deliver to.

#Netflix client ui javascript tv

But somehow, Xiao says, that didn’t mean that once the migration complete, the developers could “just sit around and watch TV shows.” The cloud, after all, is just somebody else’s computer, and scaling for the number of users is just part of the problem. One of the first steps Netflix took to cope with their swelling subscriber base was to migrate all their infrastructure to the cloud. He also looks at how this led to radically modifying their delivery framework to make it more flexible and resilient. In his talk, Yunong Xiao, Principal Software Engineer at Netflix, describes these challenges and explains how the company went from delivering content to a global audience on an ever-growing number of platforms, to supporting all modern browsers, gaming consoles, smart TVs, and beyond. The growing number of Netflix subscribers - nearing 85 million at the time of this Node.js Interactive talk - has generated a number of scaling challenges for the company.
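The process-isolation goal mentioned in this article can be illustrated with a minimal sketch. It is not Netflix's implementation, which the article does not describe; it only shows the general idea in Python, assuming each team's script runs in its own operating-system process so that a failure in one script cannot bring down the host or the other scripts. The function name `run_isolated`, the result dictionary, and the two sample scripts are hypothetical.

```python
import subprocess
import sys


def run_isolated(script_source: str, timeout_s: float = 10.0) -> dict:
    """Run one script in its own interpreter process and report the outcome."""
    try:
        proc = subprocess.run(
            [sys.executable, "-c", script_source],
            capture_output=True,
            text=True,
            timeout=timeout_s,
        )
    except subprocess.TimeoutExpired:
        # A runaway script is killed; the host process keeps running.
        return {"ok": False, "output": "", "error": "timeout"}
    return {
        "ok": proc.returncode == 0,
        "output": proc.stdout.strip(),
        "error": proc.stderr.strip(),
    }


# Two hypothetical team scripts: one healthy, one that fails.
healthy = run_isolated("print('rows for the TV client')")
faulty = run_isolated("raise MemoryError('simulated leak')")

print(healthy["ok"], healthy["output"])
print(faulty["ok"])
```

In this sketch the faulty script ends with a non-zero exit code and its error is captured, while the host and the healthy script are unaffected. In a shared-process design like the one the article describes for the script server, the same failure would hit every script in that process.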
